The past five years in AI have unfolded like a game of Civilization. Empires rise, expand, and occasionally get blindsided by newer, faster adversaries. In this landscape, the frontrunners are research labs and tech giants pushing the boundaries of what’s possible, while the fast-followers rapidly close the gap through scale, open source, and execution.

This entry breaks down the AI race into four key categories:

- Language Models

- Multimodal Models

- Agent Frameworks

- Infrastructure & Tooling

What Are Fast-Followers?

Fast following is a business strategy in which a company observes the innovations and successes of a competitor (the “first mover”) and then quickly adopts and improves on them. In effect, fast-followers capitalize on the first mover’s effort and investment by learning from both its successes and its failures.

The fast-follower advantage allows companies to:

- Benefit from the first mover’s research and development.

- Refine and improve upon existing products based on market feedback.

- Enter the market with a more mature and potentially better offering.

- Leverage existing technologies and infrastructure.

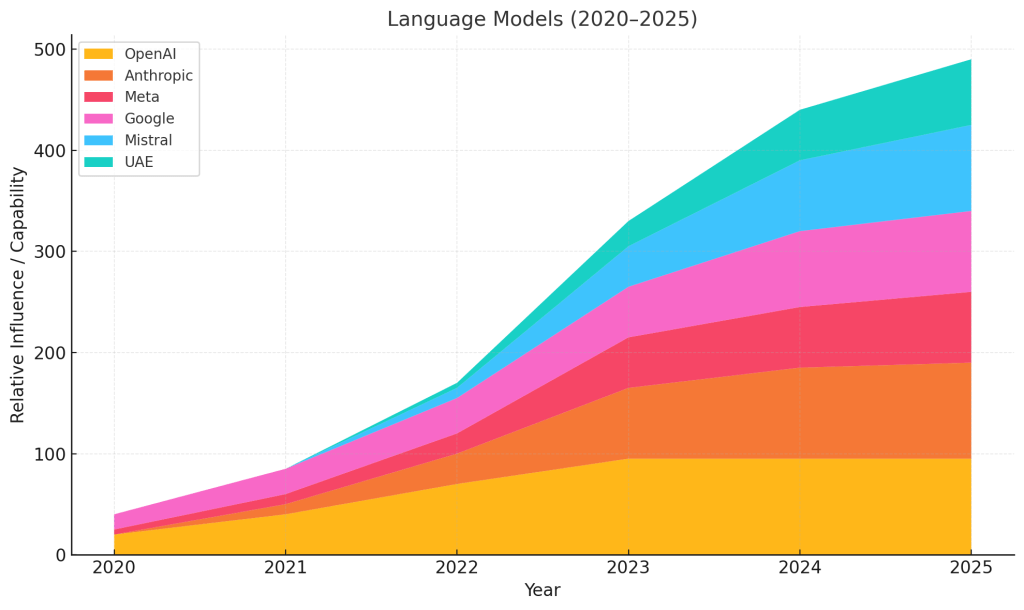

📘 1. Language Models

The foundational battleground of generative AI, this space saw massive model scaling and fine-tuning over five years.

🏆 Leaders:

- OpenAI — GPT-3, GPT-4, GPT-4o. An early leader in instruction tuning, plugin support, and real-world conversational UX via ChatGPT.

- Anthropic — Constitutional AI and long-context Claude models challenged OpenAI with safety-centric design.

- Google DeepMind — Released PaLM and Gemini 1.5, closing the reasoning gap.

⚡ Fast-Followers:

- Meta — LLaMA 1, 2, 3; the open-source counter to closed frontier models.

- Mistral AI — Efficient, high-performance open-weight models.

- UAE’s Falcon — Strong open-source challenger from the Middle East.

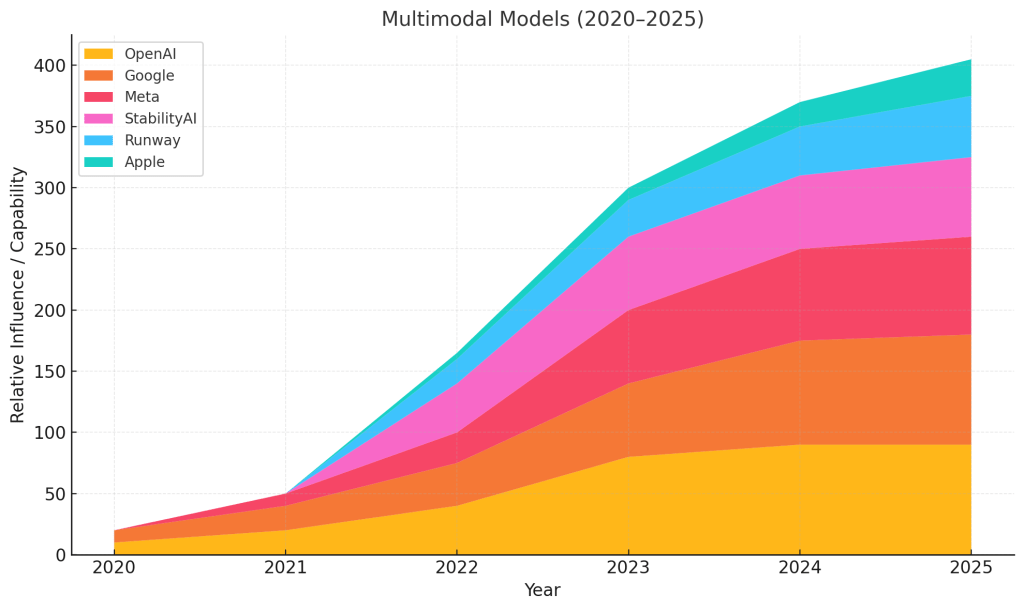

🎨 2. Multimodal Models

From text-to-image to cross-modal embeddings, this space matured into vision, speech, and audio intelligence.

🏆 Leaders:

- OpenAI — DALL·E 2, Whisper, and GPT-4o integrated image and audio reasoning.

- Google — Imagen, MusicLM, Gemini. Native multimodality and research excellence.

- Meta — Segment Anything (SAM), Make-A-Video, and ImageBind.

⚡ Fast-Followers:

- Stability AI — Stable Diffusion unlocked open-source text-to-image.

- Runway — Tools for creators using video and image synthesis.

- Apple — Focused on on-device multimodal processing (limited public visibility).

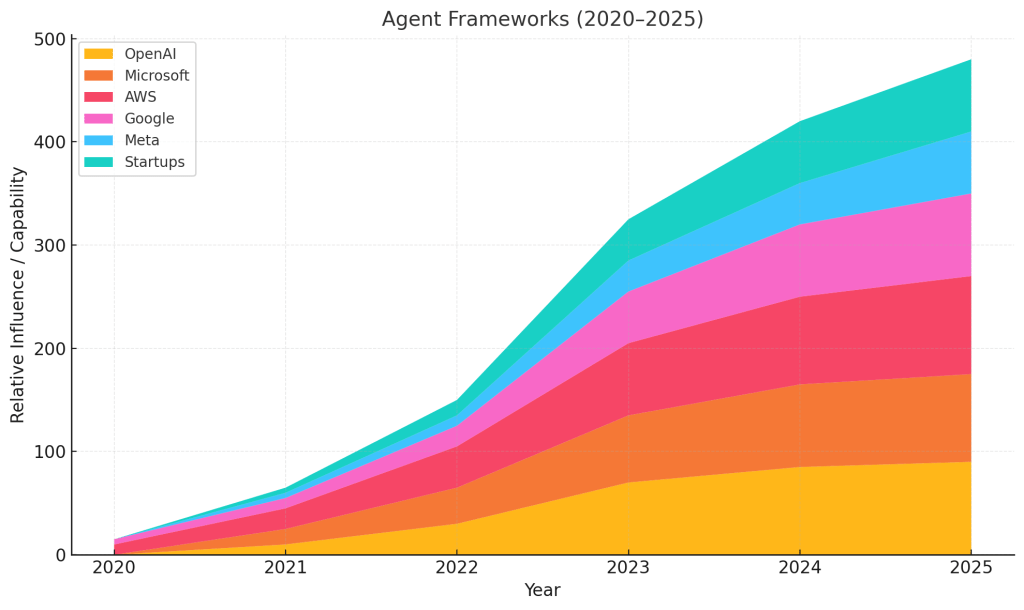

🤖 3. Agent Frameworks

The age of static models is fading. Agentic AI—models that plan, call tools, and act—is here.
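To make "plan, call tools, and act" concrete, here is a minimal sketch of an agent loop in Python. It is not any vendor's API: `fake_model`, the `TOOLS` registry, and the message shapes are all hypothetical stand-ins, and a real system would replace `fake_model` with a call to an actual language model.

```python
import json
from typing import Callable

# Hypothetical tool registry; names and functions are illustrative only.
def get_weather(city: str) -> str:
    return f"Sunny in {city}"

def add(a: float, b: float) -> float:
    return a + b

TOOLS: dict[str, Callable] = {"get_weather": get_weather, "add": add}

def fake_model(history: list[dict]) -> dict:
    """Stand-in for an LLM (assumption): asks for one tool call, then answers."""
    tool_results = [m for m in history if m["role"] == "tool"]
    if not tool_results:
        # Plan: decide that a tool is needed and describe the call as data.
        return {"type": "tool_call", "name": "add",
                "arguments": json.dumps({"a": 2, "b": 3})}
    return {"type": "final",
            "content": f"The answer is {tool_results[-1]['content']}"}

def run_agent(question: str, max_steps: int = 5) -> str:
    history = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        reply = fake_model(history)
        if reply["type"] == "final":
            return reply["content"]
        # Act: look up the requested tool and call it with decoded arguments.
        tool = TOOLS[reply["name"]]
        result = tool(**json.loads(reply["arguments"]))
        # Observe: feed the result back so the next step can use it.
        history.append({"role": "tool", "name": reply["name"],
                        "content": str(result)})
    raise RuntimeError("agent did not finish within max_steps")

print(run_agent("What is 2 + 3?"))  # prints "The answer is 5"
```

The step cap (`max_steps`) is a design choice in this sketch: it keeps a confused model from looping forever, which is one reason agent frameworks tend to wrap the loop rather than leave it to the model.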

🏆 Leaders:

- OpenAI — Pioneered function calling, plugins, memory.

- AWS —

  - Amazon Q (developer assistant + enterprise search)

  - Q CLI with MCP

  - Strands for multi-agent coordination

  - Q IDE plugins and integrated workflows

- Microsoft — HuggingGPT research demo, Microsoft 365 Copilot deployment

⚡ Fast-Followers:

- Google — Integrated Gemini with APIs and memory tools.

- Meta — Early-stage experimentation.

- Open-source startups — Auto-GPT, CrewAI, LangChain.

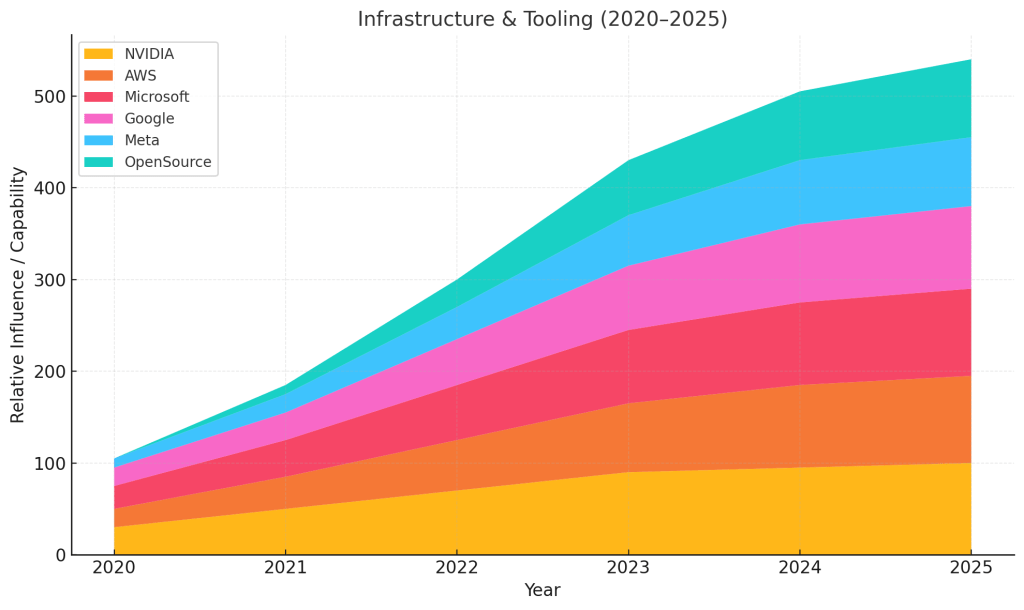

🏗️ 4. Infrastructure & Tooling

The arms and roads behind the empire. Compute, accelerators, and dev stacks fuel all AI progress.

🏆 Leaders:

- NVIDIA — A100 and H100 GPUs dominate AI compute.

- AWS — Bedrock, Inferentia, Amazon Q stack, Q CLI, Strands, Claude integration.

- Microsoft Azure — Hosted OpenAI infra, enterprise-ready services.

⚡ Fast-Followers:

- Google Cloud — TPUs, Vertex AI.

- Meta — Internal RSC supercomputer.

- Hugging Face, EleutherAI — Open tooling and model accessibility.

Final Thoughts

Whether it’s agents or infrastructure, this race is accelerating. No single “civilization” will rule alone—the future is likely to be a hybrid of open and closed systems, deeply integrated with toolchains and business logic.

The question is no longer “Who will build AGI first?” but “Who can orchestrate all the parts best—at scale, securely, and for everyone?”
