AI agents are evolving fast. What began as simple tool-calling pipelines is growing into fully orchestrated, adaptive agentic systems. This article traces that journey, from the earliest LangGraph implementations to the rise of Amazon’s Strands and AgentCore, and grounds it in the agent-building principles Anthropic outlines in its Building Effective Agents blog post and summit talk.

🛠️ LangGraph: The First Blueprint for Agent Workflows

LangGraph, a project within LangChain, was one of the earliest and most influential tools for creating agent-based applications. It introduced a graph-based runtime for chaining multiple LLM calls and tool invocations in a structured workflow.

- LangGraph allowed developers to model stateful agent behavior through directed graphs—where nodes represented steps like tool calls, LLM generations, or conditional logic.

- The early LangChain Concepts and LangGraph Introduction helped developers build agents that could reason over tasks, call external tools, and remember context over multiple steps.

- LangGraph emphasized asynchronous execution, making it suitable for real-world pipelines where long-running tools and retries were common.
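
To make the graph idea concrete, here is a minimal sketch in plain Python. It is not LangGraph's API: `TinyGraph`, the node functions, and the order-lookup scenario are all hypothetical, and the "LLM" and "tool" steps are stubs. It only illustrates nodes that transform shared state and conditional edges that pick the next node.

```python
from typing import Any, Callable, Dict, Optional

State = Dict[str, Any]
Node = Callable[[State], State]
Router = Callable[[State], Optional[str]]  # returns the next node name, or None to stop


class TinyGraph:
    """Toy directed-graph runner (hypothetical, not the LangGraph API)."""

    def __init__(self) -> None:
        self.nodes: Dict[str, Node] = {}
        self.routers: Dict[str, Router] = {}

    def add_node(self, name: str, fn: Node, router: Router) -> None:
        self.nodes[name] = fn
        self.routers[name] = router

    def run(self, start: str, state: State, max_steps: int = 20) -> State:
        current: Optional[str] = start
        for _ in range(max_steps):  # guard against accidental infinite cycles
            if current is None:
                break
            state = self.nodes[current](state)
            state.setdefault("trace", []).append(current)  # remember the path taken
            current = self.routers[current](state)
        return state


def plan(state: State) -> State:
    # Stand-in for an LLM call that decides whether a tool is needed.
    return {**state, "needs_lookup": "order" in state["question"].lower()}


def lookup(state: State) -> State:
    # Stand-in for a tool call; the result is canned.
    return {**state, "order_status": "shipped"}


def answer(state: State) -> State:
    status = state.get("order_status", "unknown")
    return {**state, "answer": f"Order status: {status}"}


graph = TinyGraph()
graph.add_node("plan", plan, lambda s: "lookup" if s["needs_lookup"] else "answer")
graph.add_node("lookup", lookup, lambda s: "answer")
graph.add_node("answer", answer, lambda s: None)

result = graph.run("plan", {"question": "Where is my order?"})
print(result["trace"], result["answer"])
```

The conditional edge after `plan` is the only branching point here, which is roughly what "mostly linear" reasoning looks like in a graph: the paths are fixed in advance, and the model only chooses among them.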

With this, developers could build structured agents—but the reasoning was still mostly linear. What followed was a jump in flexibility.

🔄 Strands + Interleaved Thinking: Adaptive Intelligence for the Enterprise

As enterprise demands for dynamic reasoning grew, rigid workflows began to break. That’s where AWS Strands entered the scene.

Strands introduced a model-driven orchestration layer, allowing developers to declaratively define tools and let the LLM orchestrate which ones to use, in what order, and with what reasoning.
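
As a rough illustration of declarative tool definition, the sketch below registers plain functions as tools and dispatches a call that a model might emit. It is written in plain Python under stated assumptions and is not the Strands SDK: the `tool` decorator, `tool_specs`, `dispatch`, and the call format are all hypothetical, and the model's choice is hard-coded.

```python
import inspect
from typing import Any, Callable, Dict, List

TOOLS: Dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a plain function as a tool (hypothetical decorator)."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def get_weather(city: str) -> str:
    """Return a canned weather report for a city."""
    return f"Sunny in {city}"


@tool
def add(a: int, b: int) -> int:
    """Add two integers."""
    return a + b


def tool_specs() -> List[Dict[str, Any]]:
    """Describe each tool the way it might be presented to a model."""
    return [
        {
            "name": name,
            "description": inspect.getdoc(fn) or "",
            "parameters": list(inspect.signature(fn).parameters),
        }
        for name, fn in TOOLS.items()
    ]


def dispatch(call: Dict[str, Any]) -> Any:
    """Execute a tool call of the assumed form {"name": ..., "arguments": {...}}."""
    fn = TOOLS.get(call["name"])
    if fn is None:
        raise KeyError(f"unknown tool: {call['name']}")
    return fn(**call["arguments"])


# In a real system the model would emit this structure after reading tool_specs().
model_choice = {"name": "add", "arguments": {"a": 2, "b": 3}}
print(dispatch(model_choice))
```

The developer only declares what each tool does; which tool runs, and in what order, is left to whatever produces `model_choice`.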

🔍 Key Feature: Interleaved Thinking (via Claude 4)

As described in this post, interleaved thinking allows agents to:

- Reflect and adapt between each tool call

- Pivot based on unexpected results

- Build contextual intelligence, not static summaries

What is Interleaved Thinking, really?

At a high level, most traditional agents follow a simple loop:

Think once → Call a tool → Respond.

But this model is brittle. If the first thought is wrong, everything downstream falls apart.

Interleaved Thinking, as enabled by Claude 4, takes a more human-like approach:

Think → Act → Reflect → Adjust → Repeat.

Instead of deciding everything up front, the agent pauses after each tool call to reconsider. It reflects on what it just learned and chooses the next step accordingly.
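
The loop can be sketched in a few lines of Python. Everything here is a stand-in: the tools return canned strings, and `reflect` is a hand-written rule set playing the role of the model's reasoning between calls. It is not how Claude or Strands implement interleaved thinking, only a picture of the control flow.

```python
from typing import Callable, Dict, List, Optional, Tuple

Observation = Tuple[str, str]  # (tool name, tool result)

# Canned tools with made-up results; a real agent would call APIs here.
TOOLS: Dict[str, Callable[[], str]] = {
    "earnings_report": lambda: "margins shrinking",
    "competitor_margins": lambda: "competitors cutting prices",
    "pricing_trends": lambda: "industry-wide price war",
}


def reflect(observations: List[Observation]) -> Optional[str]:
    """Stand-in for the model's reasoning between tool calls.

    Returns the name of the next tool to call, or None when there is enough to answer.
    """
    if not observations:
        return "earnings_report"
    last_tool, last_result = observations[-1]
    if last_tool == "earnings_report" and "margins" in last_result:
        return "competitor_margins"
    if "cutting prices" in last_result:
        return "pricing_trends"
    return None


def run_agent(
    tools: Dict[str, Callable[[], str]] = TOOLS, max_steps: int = 5
) -> List[Observation]:
    observations: List[Observation] = []
    for _ in range(max_steps):
        next_tool = reflect(observations)  # think, reflect, adjust
        if next_tool is None:
            break
        observations.append((next_tool, tools[next_tool]()))  # act
    return observations


for name, result in run_agent():
    print(name, "->", result)
```

Swapping the first tool's result for something unremarkable (say, `"record profits"`) makes `reflect` stop after a single call, which is the point: the path depends on what each step returns, not on a plan fixed up front.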

🧠 Simple Example:

Imagine you ask an agent: “Why is Tesla’s stock dropping?”

Without Interleaved Thinking:

- It might call a finance API.

- Summarize the result.

- Give you an answer in one shot.

With Interleaved Thinking:

- Agent checks Tesla’s earnings report.

- Sees margins shrinking.

- Decides to compare margins with BYD and Rivian.

- Notices competitive pricing trends.

- Shifts focus to Chinese EV subsidies.

- Synthesizes a broader story with economic and geopolitical context.

Each step adjusts based on the previous one — like a detective following leads instead of reciting facts.

This leads to:

- Smarter, more relevant answers

- Agents that pivot their strategy based on intermediate results

- Better handling of complex, multi-step reasoning

🔁 Traditional Tool Use vs 🤖 Interleaved Thinking

| 🔍 Category | 🟥 Traditional Tool Use | 🟦 Interleaved Thinking |
|---|---|---|
| Planning | 🟧 Initial Planning: Plan all queries – Tesla data, competitors, market analysis | 🟧 Strategic Planning: Start with Tesla’s latest earnings for context |
| Initial Querying | 🌐 Web Search: Tesla Q3 2024 earnings report | 🌐 Tesla Q3 Search: Focus on key metrics and challenges |
| Early Analysis | 📊 Financial API: Get Tesla stock performance Q3 | 📊 Tesla Analysis: Strong delivery growth, but margin pressure noted |
| Comparative Search | 🌐 Web Search: Ford, GM, Rivian Q3 earnings | 🌐 Targeted Search: Focus on margin strategies of competitors |
| Competitor Data | 📊 Financial API: Competitor stock data | 🧠 Pattern Recognition: Industry-wide margin squeeze, need pricing data |
| Market Trends | 🌐 Web Search: EV market trends Q3 2024 | 📊 Pricing Analysis: EV pricing trends and margins |
| Computation | 🧮 Calculator: Performance comparisons | 🧠 Market Dynamics: Tesla’s scale advantage vs traditional auto struggles |
| Synthesis | 📑 All Results: Raw data from all sources | 🔮 Forward Looking: Q4 guidance and 2025 outlook |
| Final Analysis | 🟩 Basic Analysis: Surface-level comparison report | 🟩 Strategic Analysis: Deep insights with future implications |

This combination—Strands + Claude 4’s interleaved thinking—delivers agents that aren’t just smart, but strategic. It moves us from chatbot workflows to true adaptive systems.

🤖 Anthropic’s Framework: Build Agents That Think

Anthropic’s Building Effective Agents post and Barry Zhang’s talk are essential guides for anyone serious about agent design.

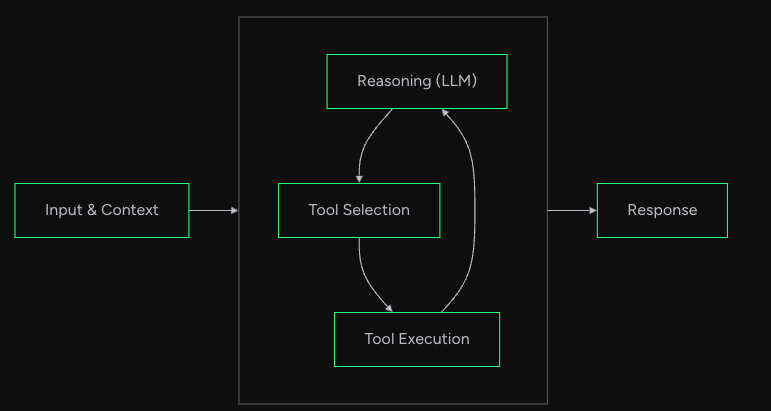

Their 3 core ideas:

- Don’t build agents for everything – Use workflows when possible; reserve agents for ambiguous, high-value tasks.

- Keep it simple – Agents are just:

  - A system prompt

  - A set of tools

  - An environment loop

- Think like your agent – Debug and design from the agent’s context window (10k–20k tokens).
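
One way to practice "thinking like your agent" is to render exactly what the model would see on its next turn and check it against a token budget. The sketch below is a hypothetical helper, not part of any SDK. The 4-characters-per-token estimate is a crude assumption for English text, and the 20,000-token default simply mirrors the upper end of the range mentioned above.

```python
from typing import Dict, List


def render_context(
    system_prompt: str,
    tool_descriptions: List[str],
    history: List[Dict[str, str]],
) -> str:
    """Concatenate everything the model would see on its next turn."""
    parts = ["[system]\n" + system_prompt]
    parts += ["[tool]\n" + d for d in tool_descriptions]
    parts += [f"[{m['role']}]\n{m['content']}" for m in history]
    return "\n\n".join(parts)


def rough_token_count(text: str) -> int:
    """Crude heuristic: assume about 4 characters per token."""
    return max(1, len(text) // 4)


def check_budget(context: str, budget_tokens: int = 20_000) -> bool:
    """True if the rendered context fits the assumed token budget."""
    return rough_token_count(context) <= budget_tokens


context = render_context(
    "You are a research assistant.",
    ["search(query): run a web search"],
    [{"role": "user", "content": "Summarize recent EV pricing trends."}],
)
print(context)
print("fits budget:", check_budget(context))
```

Reading the printed context top to bottom, with nothing else to go on, is a quick test of whether the prompt and tool descriptions actually carry enough information.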

They emphasize:

- Starting minimal before reaching for LangGraph or Strands

- Iterating on environment, toolset, and prompt first

- Using Claude itself to analyze agent decisions and trajectory

“Try doing a task using only a screenshot and a vague description—that’s what your agent experiences.”

🧠 Anthropic’s Multi-Agent Research System: The Next Evolution

Anthropic has also revealed how they scaled Claude into a multi-agent research system to tackle more complex, open-ended problems. This architecture marks the next evolutionary step beyond single-agent loops:

- The system includes a lead agent responsible for planning and synthesis.

- It dynamically spawns worker agents, each tasked with a specific subgoal and set of tools.

- The lead agent integrates their outputs into a unified response.
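
The lead/worker pattern can be sketched with the standard library alone. This is an illustrative outline, not Anthropic's implementation: `plan_subtasks`, `worker`, and `lead_agent` are hypothetical names, planning and synthesis are stubs, and each worker would in practice run its own tool loop in a fresh context.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List


def plan_subtasks(question: str) -> List[str]:
    """Lead-agent planning step (stub): split a question into subtopics."""
    aspects = ("background", "current state", "open problems")
    return [f"{question} :: {aspect}" for aspect in aspects]


def worker(subtask: str) -> str:
    """Worker agent (stub): would research one subgoal with its own tools."""
    return f"findings for [{subtask}]"


def lead_agent(question: str) -> str:
    subtasks = plan_subtasks(question)
    # Run workers in parallel; pool.map returns results in input order.
    with ThreadPoolExecutor(max_workers=len(subtasks)) as pool:
        results = list(pool.map(worker, subtasks))
    # Synthesis step (stub): a real lead agent would reason over the findings.
    return "\n".join(results)


print(lead_agent("agent communication protocols"))
```

Each worker receives only its own subtask string, so no single context has to hold the whole investigation.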

🔄 Benefits:

- Supports parallel research on subtopics

- Protects each agent’s context window from bloat

- Reflects real-world team dynamics (delegation, specialization)

⚠️ Tradeoffs:

- Uses far more tokens: Anthropic reports that multi-agent systems use about 15x the tokens of a chat interaction, versus about 4x for a single agent

- Needs thoughtful cost/latency budgeting
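
A back-of-the-envelope budget check makes the tradeoff tangible. The numbers in the example call are placeholders, not real pricing: the baseline token count, the multiplier, and the price per million tokens are all assumptions to replace with your own measurements.

```python
def estimate_cost(
    base_tokens: int, multiplier: float, usd_per_million_tokens: float
) -> float:
    """Estimated spend for one task: baseline tokens scaled by a usage multiplier."""
    return base_tokens * multiplier * usd_per_million_tokens / 1_000_000


def within_budget(
    base_tokens: int,
    multiplier: float,
    usd_per_million_tokens: float,
    budget_usd: float,
) -> bool:
    """True if the estimated spend for one task stays within the budget."""
    return estimate_cost(base_tokens, multiplier, usd_per_million_tokens) <= budget_usd


# Hypothetical inputs: 20,000 baseline tokens, 15x multiplier, $5 per million tokens.
cost = estimate_cost(20_000, 15, 5.0)
print(f"estimated cost per task: ${cost:.2f}")
print("within $1.00 budget:", within_budget(20_000, 15, 5.0, budget_usd=1.00))
```

With these inputs the arithmetic is 20,000 × 15 = 300,000 tokens, and 300,000 × $5 / 1,000,000 = $1.50 per task, which would exceed a $1.00 budget.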

📡 Open Question: How should agents communicate with each other asynchronously? Most current systems (including Claude) rely on rigid user/assistant chat turns. Multi-agent futures demand richer, role-based protocols.

This approach aligns closely with Strands orchestration + AgentCore execution, pushing toward full-spectrum agent ecosystems.

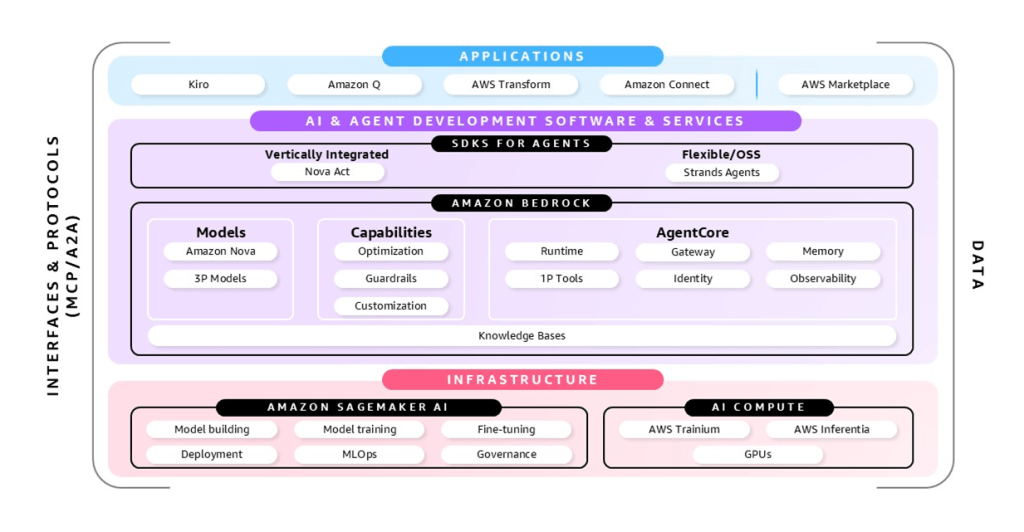

⚙️ Agentic AI & AgentCore: From Playbooks to Production

The next step in the evolution is Amazon Bedrock AgentCore, a set of AWS services designed to make deploying and operating AI agents at scale both feasible and secure. AgentCore addresses the fundamental challenge: taking intelligent, autonomous systems beyond proof-of-concept and into production.

Why We Need AgentCore

AI agents reason, plan, and adapt dynamically—unlike traditional static applications. But deploying them requires managing scalability, memory, security, interoperability, and observability in entirely new ways. Without dedicated infrastructure, companies risk re-inventing tooling per project. AgentCore changes that.

Read more here: AgentCore Overview

- Documentation: AgentCore Docs

- GitHub samples: AgentCore Examples

🔧 What AgentCore Enables:

- Flexible agent loop orchestration with state management

- Event-driven execution and control hooks

- Integration with tools, APIs, and RAG systems

- Compatibility with Amazon Bedrock for deploying Claude, Titan, and other models

AgentCore builds on everything LangGraph and Strands pioneered—but layers in real-world engineering concerns: logging, fault tolerance, scaling, and guardrails.
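
To give a feel for what those engineering concerns look like in code, here is a small plain-Python sketch of a tool runner with event logging and bounded retries. It is not the AgentCore API: `ToolRunner`, `make_flaky`, and the event strings are hypothetical and only illustrate the kind of plumbing a production runtime takes off your hands.

```python
import time
from typing import Any, Callable, List


class ToolRunner:
    """Wraps tool calls with an event log and bounded retries (hypothetical)."""

    def __init__(self, max_attempts: int = 3, base_delay: float = 0.0) -> None:
        self.max_attempts = max_attempts
        self.base_delay = base_delay
        self.events: List[str] = []  # stand-in for a structured log sink

    def call(self, name: str, fn: Callable[[], Any]) -> Any:
        for attempt in range(1, self.max_attempts + 1):
            self.events.append(f"start {name} attempt={attempt}")
            try:
                result = fn()
            except Exception as exc:
                self.events.append(f"error {name}: {exc}")
                if attempt == self.max_attempts:
                    raise  # give up after the final attempt
                # Exponential backoff between attempts.
                time.sleep(self.base_delay * 2 ** (attempt - 1))
            else:
                self.events.append(f"ok {name}")
                return result


def make_flaky(fail_times: int) -> Callable[[], str]:
    """Build a fake tool that fails a fixed number of times, then succeeds."""
    remaining = {"n": fail_times}

    def flaky() -> str:
        if remaining["n"] > 0:
            remaining["n"] -= 1
            raise RuntimeError("timeout")
        return "done"

    return flaky


runner = ToolRunner(max_attempts=3)
print(runner.call("search", make_flaky(2)))
print(runner.events)
```

The agent logic never sees the retries; it just gets a result or an exception, while the event list keeps the trail needed for debugging.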

📌 Final Thoughts: How to Choose

| Use Case | Recommended Approach |
|---|---|
| Linear, predictable tasks | Workflows (LangChain, direct calls) |
| Complex, tool-rich tasks | LangGraph or early agent loops |
| Adaptive, strategic reasoning | Strands + Claude 4 interleaved thinking |
| Multi-agent collaboration | Claude multi-agent research pattern |
| Enterprise-scale deployment | AgentCore on AWS |

This journey—from LangGraph to Strands to AgentCore to multi-agent systems—is a masterclass in how fast agentic AI is maturing.

Anthropic’s clear mental model gives me the right principles.

Strands gives me strategic adaptability.

Multi-agent orchestration brings modular scale.

And AgentCore gives me production muscle.

We’re not just calling tools anymore.

We’re designing systems that think—and collaborate.
